
Outcomes in virtual reality knee arthroscopy for residents and attending surgeons

2 Department of Simulation, ABC Medical Center, Mexico City, México

Corresponding Author:

Claudia Arroyo-Berezowsky

Av. Vasco de Quiroga 4299 Office 1003 Lomas de Santa Fe, 05348 Cuajimalpa de Morelos, Mexico City

México

dra.carroyob@gmail.com

| How to cite this article: Arroyo-Berezowsky C, Torres-Gómez A, Romo-Rodríguez R, Ruiz-Speare JO. Outcomes in virtual reality knee arthroscopy for residents and attending surgeons. J Musculoskelet Surg Res 2019;3:189-195 |

Abstract

Objectives: Arthroscopic surgery is one of the most common procedures in orthopedic surgery. It is well suited to simulation training, which has consistently shown improvement in trainees' motor skills. The objective of this study was to compare the differences in absolute values for virtual reality diagnostic knee arthroscopy with and without a probe for residents and attending surgeons at our hospital, and to quantify the difference in results between an initial and final assessment for all participants. Methods: Eighteen residents and twenty attending orthopedic surgeons completed a sequence of exercises that included a diagnostic knee arthroscopy with and without a probe on the ARTHRO Mentor™ virtual reality simulator. The variables analyzed were as follows: time to complete the task, distance traveled by the arthroscope and the probe, arthroscope and probe roughness, and overall task score. We compared residents' scores with attending surgeons' scores and quantified the difference in all participants' results for the initial and final assessment. Results: There was no statistically significant difference in results between residents and attending surgeons. There was a statistically significant improvement in some variables for the knee diagnostic arthroscopy without a probe for all participants in the final assessment. Conclusions: There were no differences between attending surgeons and residents in the virtual reality knee diagnostic arthroscopy with and without a probe. There was an improvement in some variables for the knee diagnostic arthroscopy without a probe for all the participants. With constant training, anyone can improve their simulation motor skills. External validation studies are necessary.

Introduction

Residency programs worldwide have conventionally followed a one-to-one apprenticeship model. This has changed over the past decades, particularly in orthopedics, due to the restriction in resident working hours in some countries, more complex procedures, an increased risk of lawsuits, and increasing operating room (OR) cost.[1],[2],[3],[4],[5],[6] To face these restrictions, the United States[7] recently incorporated simulation into the residency curricula for postgraduate year 1 (PGY1) residents, and the United Kingdom is working on a curriculum for orthopedic education, known as the Orthopedic Competency Assessment Program, that evaluates, among other things, motor skill development.[8]

Simulation in medicine has been defined as any technology or process that recreates a contextual background so that the trainee can experience an error and receive feedback in a secure environment.[3] Arthroscopic surgery is one of the most common procedures in orthopedic surgery, with highly demanding technical aspects. It requires visuospatial coordination to manipulate instruments while interpreting three-dimensional (3D) images in 2D. This makes it well suited to simulation training.[1] Simulation in arthroscopy has consistently shown improvement in trainees' motor skills. However, there are currently no formal plans for compulsory simulation training, and it is not yet widely implemented in residency and fellowship training.[5],[9],[10],[11],[12],[13],[14]

Internal validation for simulators is the capability of the simulator to differentiate between a novice and an experienced surgeon, and this has been the most studied aspect of arthroscopic simulators up until now. Most studies have assessed abilities in one moment in time, and only a few have attempted to test the transferability of motor skills acquired with the simulator to the OR. This is known as external validation.[9],[15],[16]

The main objective of this study was to compare residents' and attending surgeons' scores before and after completing a sequence of exercises on the knee arthroscopic virtual reality simulator ARTHRO Mentor™, from Simbionix, 3D Systems (Cleveland, Ohio), that included a knee diagnostic arthroscopy with and without a probe.

Materials and Methods

All residents who were enrolled in our hospital's orthopedic residency program, including the ones who would be starting in March, were invited to participate. Every orthopedic attending surgeon working at our hospital was also invited to participate. Eighteen residents accepted the invitation (all four new residents, four PGY1, four PGY2, four PGY3, and only two PGY4), as did twenty attending surgeons (ten who were classified as arthroscopists and ten who were not, according to the Arthroscopy Association of North America's criterion for arthroscopist). Demographic information for all participants was recorded, including hand dominance and videogame use.

For the simulation part, we used the ARTHRO Mentor™, located in our hospital's simulation training center. It is a virtual reality simulator that provides haptic feedback when instruments come in contact with an anatomic structure inside the knee. This means that the user will feel resistance while handling the instruments during an arthroscopy. The model was a right knee and the instruments' positions were fixed: the arthroscope was always handled with the left hand and the probe with the right hand, regardless of the participant's handedness.

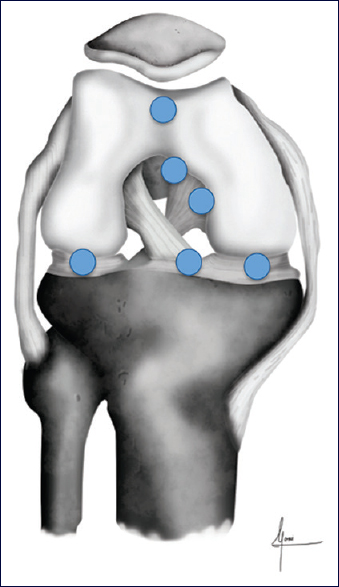

All the participants signed an informed consent to allow the use of data collected during the study. Every participant completed the same sequence of exercises on the ARTHRO Mentor™. It consisted of four basic tasks that form part of the Fundamentals of Arthroscopic Surgery Training (FAST) program, to learn how to navigate and triangulate with the simulator. They also completed a knee diagnostic arthroscopy with and without a probe with a random path and a knee diagnostic arthroscopy with and without a probe with a set path. In the knee arthroscopies, they had to locate (and touch, if they were using the probe) a series of blue spheres distributed throughout the knee. Every time the user repeated the exercise in the random path arthroscopies, the spheres appeared in a different, nonpredictable order. In the set path exercises, they appeared in a specific order every time the exercise was repeated. Each exercise was completed three times for a total of twelve repetitions. The positions of the spheres can be observed in [Figure - 1] and [Figure - 2].


| Figure 1: Sphere positions inside the right knee for the diagnostic knee arthroscopy without a probe. The blue spheres represent positions inside the knee that need to be found by the participant |


| Figure 2: Sphere positions inside the right knee for the diagnostic knee arthroscopy with a probe. The blue spheres represent positions inside the knee that need to be found by the participant |

There was no time limit for completion and verbal coaching regarding the position of the spheres was available to all participants. A diagram with the location of the spheres and previous demonstration was given to the new residents who had never been in contact with knee arthroscopy.

The variables recorded were the ones measured by the ARTHRO Mentor™ for each exercise. These were: time to complete the exercise (which was converted from minutes to seconds), distance traveled by the arthroscope and the probe (measured in mm), arthroscope and probe roughness measured in N (which represents the number of collisions of an instrument with a structure within the knee), and a global score (ranging from zero to ten possible points).

The knee arthroscopies with and without a probe with a random path were considered the initial assessments, and knee arthroscopies with and without a probe with a set path were considered the final assessments. We gathered results for both the initial and final assessments for both residents and attending surgeons.

We first analyzed the difference between residents and attending surgeons in each assessment. Statistical analysis consisted of normality tests for continuous variables (Kolmogorov–Smirnov and Shapiro–Wilk tests). Parametric variables were described as medians (standard deviation) and nonparametric variables as means (interquartile range, minimum-maximum). Comparisons between numeric variables were made with Student's t test for parametric variables and the Mann–Whitney U test for nonparametric variables. Medians are reported with a 95% confidence interval. A two-tailed P < 0.05 was considered statistically significant. Then, we calculated the differences between the final and initial assessment results for all the participants as a single group. Differences are reported as an absolute value from means or medians.
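To make the nonparametric comparison concrete, the following is a minimal Python sketch of a two-tailed Mann–Whitney U test using the normal approximation (without tie or continuity correction). It is only an illustration: the source does not state which statistical software was used, the function names `average_ranks` and `mann_whitney_u` are hypothetical, and the sample completion times are invented for the example rather than taken from the study.

```python
import math


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(a, b):
    """Return (U, two-tailed p) using the normal approximation."""
    n1, n2 = len(a), len(b)
    ranks = average_ranks(list(a) + list(b))
    rank_sum_a = sum(ranks[:n1])
    u_a = rank_sum_a - n1 * (n1 + 1) / 2
    u = min(u_a, n1 * n2 - u_a)
    mu = n1 * n2 / 2
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u - mu) / sigma
    p = math.erfc(abs(z) / math.sqrt(2))  # two-tailed p from standard normal
    return u, p


# Hypothetical completion times in seconds (NOT study data).
residents_s = [310.0, 355.0, 342.0, 298.0, 401.0]
attendings_s = [322.0, 330.0, 287.0, 365.0, 315.0]

u_stat, p_value = mann_whitney_u(residents_s, attendings_s)
significant = p_value < 0.05  # threshold stated in the Methods
print(f"U = {u_stat:.1f}, P = {p_value:.3f}, significant = {significant}")
```

With groups as small as those in this study, an exact test or a validated statistical package would normally be preferred over the normal approximation sketched here.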

Results

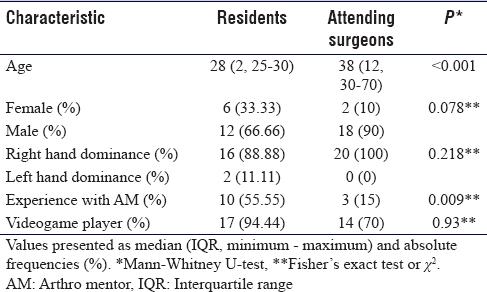

Demographic information can be observed in [Table - 1] and [Table - 2]. Eighteen residents and twenty attending surgeons (ten arthroscopists and ten nonarthroscopists) participated. The participants were on average 31 years of age, there were only eight women (six residents and two attending surgeons), 94.7% of participants were right-handed, and 81.6% of all participants played video games (94.44% of residents and 70% of attending surgeons). Of all participants, 34.2% had previous experience using the simulator (55.55% of the resident group and 15% of the attending surgeon group, P < 0.009). Residents were on average 28 years of age and attending surgeons were 38 years of age (P < 0.001); 90% of attending surgeons and 66.66% of residents were male. There were two left-handed residents and the rest were right-handed.

When comparing resident and attending surgeon scores for the initial assessment in arthroscopy without a probe, there were no statistically significant differences between them, although attending surgeons tended to have better scores in time to complete the exercise (322 s vs. 341.67 s, P = 0.869) and roughness with the arthroscope (30 vs. 31.33, P = 0.775). Meanwhile, residents had better results in the distance traveled with the arthroscope (19088.67 mm vs. 2323.50 mm, P = 0.517) and a better overall score (3.28 vs. 2.75, P = 0.619). For the final assessment in knee arthroscopy without a probe, there were also no statistically significant differences between residents and attending surgeons. Residents tended to have better results in time to complete the exercise (165 s vs. 219 s, P = 0.800), they traveled less distance with the arthroscope (952.33 mm vs. 1234.33 mm, P = 0.845), and scored slightly higher in the global score than attending surgeons (6.95 vs. 6.94, P = 0.798). Attending surgeons still had better scores in roughness (17.33 N vs. 19 N, P = 0.845) than residents. These results can be observed in [Table - 3].

For the initial assessment in knee arthroscopy with a probe, there were also no statistically significant differences in results. Residents had better scores in the distance traveled with the arthroscope (421 mm vs. 507.50 mm, P = 0.390) and the probe (1323 mm vs. 1381 mm, P = 0.775), roughness with the probe (8.67 N vs. 9.33 N, P = 0.845), and a better overall score (7.04 vs. 6.93, P = 0.845) than attending surgeons. For the final assessment, attending surgeons had better results in time to complete the exercise (137.66 s vs. 177 s, P = 0.460), roughness with the arthroscope (5.66 N vs. 6 N, P = 0.821), distance traveled with the probe (1034.67 mm vs. 1559 mm, P = 0.177), and a higher overall score (7.57 vs. 6.86, P = 0.270). These results can be observed in [Table - 4].

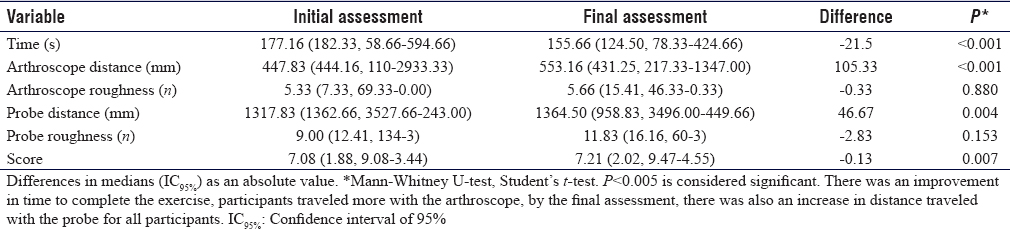

We calculated the difference in results between the initial and final assessments for all participants in the knee arthroscopy without a probe. We found a statistically significant improvement in the time to complete the exercise (333.33 s to 230.10 s, P < 0.001), roughness with the arthroscope (30.16 N to 17.83 N, P < 0.001), and total global score (3.13 to 6.96, P < 0.001) [Table - 5]. When we analyzed the same difference for the knee diagnostic arthroscopy with a probe, we found some improvement in the time to complete the exercise (177.16 s to 155.66 s, P < 0.001), but participants traveled more distance with the arthroscope (447.83 mm to 553.16 mm, P < 0.001) and with the probe (1317.83 mm to 1364.50 mm, P < 0.004), and these changes were also statistically significant. For the final assessment, the use of both the arthroscope (5.33 N to 5.66 N, P = 0.880) and the probe (9.00 N to 11.83 N, P = 0.153) was rougher than on the initial assessment, and the overall score was slightly higher, although these changes were not statistically significant. These results can be seen in [Table - 6].
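The arithmetic behind these comparisons can be sketched in a few lines of Python. The initial and final values are those quoted above for the arthroscopy without a probe; the relative change in percent is a derived figure added here for illustration and is not reported in the source, and the names `reported` and `change` are hypothetical.

```python
# (initial, final) values quoted in the text for arthroscopy without a probe.
reported = {
    "time_s": (333.33, 230.10),
    "arthroscope_roughness_N": (30.16, 17.83),
    "global_score": (3.13, 6.96),
}


def change(initial, final):
    """Return (absolute difference, signed relative change in percent)."""
    absolute = abs(final - initial)
    relative_pct = 100 * (final - initial) / initial
    return round(absolute, 2), round(relative_pct, 1)


changes = {name: change(*pair) for name, pair in reported.items()}

for name, (absolute, relative_pct) in changes.items():
    print(f"{name}: absolute difference {absolute}, relative change {relative_pct}%")
```

Note that for time and roughness a negative relative change is an improvement, whereas for the global score a positive change is.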

Discussion

A systematic review conducted by Morgan et al. found that out of 76 studies involving simulation in orthopedics, 47 were validation studies. The most studied kind of validation was construct (internal) validation. Construct validation is useful to differentiate between the levels of surgical ability. Sixty-two percent of the construct validation studies were for arthroscopy, and most of these were knee arthroscopy studies.[15] Hetaimish et al. also found in a systematic review that the most commonly studied and reported type of validity was construct validity, in 85% of studies. Fifty-four percent of the studies evaluated the internal validity of simulators. External validity (or transferability of surgical ability to the OR) was reported in 62% of studies. Twenty-three percent of studies investigated the transferability of simulator abilities to the OR and 46% studied transferability of abilities between different simulators.[16]

Most studies with arthroscopy simulators have focused on the internal validation (or construct validation) of simulators and their capacity to improve residents' arthroscopic abilities. It has been demonstrated that less experienced users tend to have worse scores than more experienced ones.[9],[17],[18],[19],[20],[21],[22],[23],[24]

We could wonder if internal validation studies in simulators have really validated pure motor skills or the overall ability of the surgeon to complete a knee arthroscopy. In our study, conditions regarding the knowledge of the simulator, how the exercise is structured, and how to get to the spheres were homogenized with verbal coaching, to the extent that we did not see a statistically significant difference in pure motor skills.

We did not find a statistically significant difference between both groups in the present study, which could mean we have not internally validated the ARTHRO Mentor™ at our hospital. However, since there have been many publications that prove internal validity, we assume its capacity to differentiate between ability levels.[6],[22],[23] The lack of difference may be explained by the ways in which this study design differed from most published ones: there was no time limit, and the objective was for all participants to complete the whole sequence. Verbal coaching was available to all participants to help locate the spheres. While the effect of coaching was not measured, subjectively, residents and nonarthroscopists could have taken longer to complete the exercise. Participants spent between 90 and 120 min working on the same task; although the order in which the spheres appeared changed, the exercises were practically the same.

In a systematic review by Tay et al. about simulators in arthroscopy, the most reported variable in all studies was the completion time for the exercise. The next most reported variable was movement economy (traveled distance with arthroscope and probe). The number of collisions with the cartilage, reported as roughness, was the least reported variable. They found only one study that demonstrated concurrent validity (the extent to which the results of the simulator correlate with the gold standard of the domain).[25] In most internal validation studies, it has been concluded that the movement analysis (especially traveled distance by the instruments and completion time) can discriminate between expert and novice surgeons.[16],[19],[24] We did not find important differences between our two groups, but we noticed an improvement in the time to complete the exercise, roughness with the arthroscope, and total score. Based on this, we could assume that our participants could go from novice to expert in a simulated knee arthroscopy after 90–120 min of continued practice. Although this does not mean that they became experts in knee arthroscopy after this time, motor training and constant practice can surely be beneficial.

Most studies report standard deviations and differences between groups, but almost no study reported the absolute values for any variable studied.[21],[26],[27] As far as we can tell, there are no reference values to determine who is considered an expert and who is considered a novice based on the simulation values, nor a set reference value to assure competency. The only other study we found where actual values are reported is the one published by Jacobsen et al.[19] They had a group of residents and experienced surgeons perform different exercises on the virtual reality simulator; two of them were knee diagnostic arthroscopy with and without a probe, similar to this study. The difference is that they only completed the exercise one time, while in this study, there was an initial assessment consisting of a sequence of three repetitions for each exercise, and a final assessment also consisting of three repetitions for each exercise. While they report medians and standard deviations and we report means and interquartile differences, we found certain differences in reported values.[19] There was an overall improvement in most variables in our study groups compared to Jacobsen's groups after practicing for about 90–120 min in this study, which also supports the idea that, with enough practice, residents are not the only ones who can improve their motor skills. We believe simulation can be beneficial even for established surgeons as a means to improve their skills. It could also work as a recertification tool.

Although there are no established reference values to determine a certain level of proficiency in simulated knee arthroscopy, Jacobsen et al.[19] established a pass–fail system with standard deviations in their study. This needs to be further explored and we believe researchers need to start reporting values besides standard deviations to make a pooled effort to establish reference values.

In general, arthroscopy simulator studies have found that there is a significant improvement in residents' surgical abilities after the use of high- and low-fidelity simulators. For the time being, no study that we know of has been able to determine if a high-fidelity simulator is better than a low-fidelity simulator for the acquisition of surgical skills. There have also been inconsistencies in the way of evaluating and reporting performance on the simulators.[16],[20],[21],[22],[23],[24]

In a recent study by Marcheix et al., conducted over a 4-month period and comparing residents who were trained on a virtual reality simulator with controls who were experienced surgeons, residents had worse scores than controls in the pretest evaluation. This difference, however, was not present after 12 weeks of regular use of the simulator by residents.[28] Another study showed that training for 5 h over a 1-week period is sufficient to improve the skills of an inexperienced surgeon.[26] These findings are similar to ours to a certain extent, although practice time in this study was close to 2 h instead of 5 h over a week. When we analyzed the difference between scores during the initial assessment and the final assessment for the knee arthroscopy without a probe, we found an improvement in time to complete the exercise, roughness with the arthroscope, and score for all participants. This did not mirror the results of the knee arthroscopy with the probe, where only time to complete the exercise improved. Still, we believe this shows that practice helps improve the results in knee diagnostic arthroscopy with and without a probe. If participants are conscious of what each variable represents and practice enough, they can achieve better results. We also believe that practicing using a simulator could be beneficial even for established orthopedic surgeons or surgeons who are not as familiar with arthroscopy. Assessment with a simulator could even help with board certification and competency evaluations for surgeons of all levels of expertise.

As part of this study, in a previously published article, we used the final assessment results for residents and attending surgeons to establish reference values as baseline controls for follow-up for a training program.[27] Still, as we discussed earlier, global scores were not high, and we believe they can be improved and used for competency-based evaluation. For evaluating competency for the Da Vinci robot, Raison et al. proposed expert competency levels of >75% based on a percentage score related to an established mean expert score as suitable for expert practitioners.[29] We believe this could be a good way to set competency levels with arthroscopic simulators; however, mean expert scores and absolute values need to be established in the literature.
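A percentage-of-expert threshold of this kind could be sketched as follows. This is only an illustration of the idea as summarized above, not the scoring method of Raison et al. or of the simulator: the expert scores are hypothetical, the function names `percent_of_expert` and `meets_threshold` are invented for the example, and the sketch assumes a metric where higher is better, such as the zero-to-ten global score.

```python
from statistics import mean

# Threshold quoted in the text from Raison et al. (>75% of mean expert score).
EXPERT_THRESHOLD_PCT = 75.0


def percent_of_expert(score, expert_scores):
    """Express a score as a percentage of the mean expert score."""
    return 100 * score / mean(expert_scores)


def meets_threshold(score, expert_scores, threshold=EXPERT_THRESHOLD_PCT):
    """True if the score exceeds the threshold percentage of the expert mean."""
    return percent_of_expert(score, expert_scores) > threshold


# Hypothetical expert global scores (NOT study data); no such reference
# values have yet been established in the literature.
hypothetical_expert_scores = [8.0, 9.0, 10.0]

for trainee_score in (6.3, 7.2):
    pct = percent_of_expert(trainee_score, hypothetical_expert_scores)
    passed = meets_threshold(trainee_score, hypothetical_expert_scores)
    print(f"score {trainee_score}: {pct:.1f}% of expert mean, passes = {passed}")
```

For metrics where lower is better (time, distance traveled, roughness), the ratio would need to be inverted or a separate threshold defined.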

This is the first study done in Mexico with a virtual reality arthroscopy simulator. There are a few limitations and differences with other studies. First, the sample size was small since there are few residents in each academic year, and few attending surgeons participated in the study. We grouped the arthroscopist and nonarthroscopist attending surgeons in a single group because each subgroup was small and we could not do a meaningful analysis by subgroups. In other studies, participants have had a limited amount of time to practice and complete the evaluation. In this study, there was no time limit, and hence the completion time of the whole sequence ranged between 90 min and almost 120 min. We also provided verbal coaching to help find the spheres. We believe this is useful to help homogenize the groups and eliminate differences that are due to interface use, knowledge of the virtual reality program, and small technical differences in how to perform an arthroscopy, especially with younger residents (flexing and extending the knee to reach certain areas in the knee or applying varus or valgus stress). Residents from PGY2 to PGY4 were familiar with the ARTHRO Mentor™, but almost none had had a lot of practice. Only three attending surgeons had previously used the ARTHRO Mentor™.

Since we observed an improvement after practicing and repeating the same exercises in residents and attending surgeons, we believe practice and simulation could be helpful to further develop anyone's basic motor skills for performing arthroscopy. These results, together with the currently available literature, encourage us to start a training program incorporating simulation for motor arthroscopic competency, added to the theoretical classes residents already have over the academic year.

The next steps in the investigation with simulation training are to standardize the assessment of simulation arthroscopy validation, set reference values for competency and prove that practicing using the arthroscopy simulator can lead to improvement in a real arthroscopy – that is to prove external validation.

Conclusions

We did not find any statistically significant differences between residents' and attending surgeons' scores for the initial and final assessment in knee arthroscopy with and without a probe. We did observe an improvement in time, arthroscope roughness, and overall score for everyone in knee arthroscopy without a probe after practicing for a period of time. We believe that with enough practice, it is possible for all users to improve their performance in knee arthroscopy. External validation studies are needed for virtual reality simulators and reference values need to be standardized to establish an objective level of competency in the simulator.

Ethical consideration

This study was approved by the investigation board at our hospital and every participant signed an informed consent.

Acknowledgments

We would like to thank all the residents and staff members who participated in the study, the simulation center personnel, who were very helpful, and graphic designer Mónica Arroyo Berezowsky for her illustrations of the knee.

Financial support and sponsorship

Nil.

Conflicts of interest

There are no conflicts of interest.

Authors' contributions

CAB contributed with concepts, design, literature search, data acquisition and analysis, manuscript preparation, editing, and review. ATG contributed with concept design, data and statistical analysis, and manuscript review. RRR contributed with concepts, design, manuscript editing, and review. JOR contributed with concepts, data acquisition, manuscript review, and acted as guarantor. All authors have critically reviewed and approved the final draft and are responsible for the content and similarity index of the manuscript.

| 1. | Hodgins JL, Veillette C. Arthroscopic proficiency: methods in evaluating competency. BMC Med Educ 2013;13:61. |

| 2. | Unalan PC, Akan K, Orhun H, Akgun U, Poyanli O, Baykan A, et al. A basic arthroscopy course based on motor skill training. Knee Surg Sports Traumatol Arthrosc 2010;18:1395-9. |

| 3. | Stirling ER, Lewis TL, Ferran NA. Surgical skills simulation in trauma and orthopaedic training. J Orthop Surg Res 2014;9:126. |

| 4. | Rosen KR. The history of medical simulation. J Crit Care 2008;23:157-66. |

| 5. | Hui Y, Safir O, Dubrowski A, Carnahan H. What skills should simulation training in arthroscopy teach residents? A focus on resident input. Int J Comput Assist Radiol Surg 2013;8:945-53. |

| 6. | Akhtar K, Sugand K, Wijendra A, Standfield NJ, Cobb JP, Gupte CM. Training safer surgeons: How do patients view the role of simulation in orthopaedic training? Patient Saf Surg 2015;9:11. |

| 7. | Dougherty PJ. CORR curriculum - orthopaedic education: Faculty development begins at home. Clin Orthop Relat Res 2014;472:3637-43. |

| 8. | Pitts D, Rowley DI, Sher JL. Assessment of performance in orthopaedic training. J Bone Joint Surg Br 2005;87:1187-91. |

| 9. | Lopez G, Martin DF, Wright R, Jung J, Hahn P, Jain N, et al. Construct Validity for a Cost-effective Arthroscopic Surgery Simulator for Resident Education. J Am Acad Orthop Surg 2016;24:886-94. |

| 10. | Hiemstra E, Kolkman W, Wolterbeek R, Trimbos B, Jansen FW. Value of an objective assessment tool in the operating room. Can J Surg 2011;54:116-22. |

| 11. | Gardner AK, Scott DJ, Pedowitz RA, Sweet RM, Feins RH, Deutsch ES, et al. Best practices across surgical specialties relating to simulation-based training. Surgery 2015;158:1395-402. |

| 12. | Martin MK, Patterson DP, Cameron KL. Arthroscopic Training Courses Improve Trainee Arthroscopy Skills: A Simulation-Based Prospective Trial. Arthroscopy 2016;32:2228-32. |

| 13. | Niitsu H, Hirabayashi N, Yoshimitsu M, Mimura T, Taomoto J, Sugiyama Y, et al. Using the Objective Structured Assessment of Technical Skills (OSATS) global rating scale to evaluate the skills of surgical trainees in the operating room. Surg Today 2013;43:271-5. |

| 14. | Koehler RJ, Amsdell S, Arendt EA, Bisson LJ, Braman JP, Bramen JP, et al. The Arthroscopic Surgical Skill Evaluation Tool (ASSET). Am J Sports Med 2013;41:1229-37. |

| 15. | Morgan M, Aydin A, Salih A, Robati S, Ahmed K. Current Status of Simulation-based Training Tools in Orthopedic Surgery: A Systematic Review. Journal of Surgical Education 2017;74:698-716. |

| 16. | Hetaimish B, Elbadawi H, Ayeni OR. Evaluating Simulation in Training for Arthroscopic Knee Surgery: A Systematic Review of the Literature. Arthroscopy 2016;32:1207-20. |

| 17. | Slade Shantz JA, Leiter JRS, Gottschalk T, MacDonald PB. The internal validity of arthroscopic simulators and their effectiveness in arthroscopic education. Knee Surgery, Sports Traumatology, Arthroscopy 2014;22:33-40. |

| 18. | Camp CL, Krych AJ, Stuart MJ, Regnier TD, Mills KM, Turner NS. Improving Resident Performance in Knee Arthroscopy: A Prospective Value Assessment of Simulators and Cadaveric Skills Laboratories. J Bone Joint Surg Am 2016;98:220-5. |

| 19. | Jacobsen ME, Andersen MJ, Hansen CO, Konge L. Testing basic competency in knee arthroscopy using a virtual reality simulator: exploring validity and reliability. J Bone Joint Surg Am 2015;97:775-81. |

| 20. | Martin KD, Belmont PJ, Schoenfeld AJ, Todd M, Cameron KL, Owens BD. Arthroscopic basic task performance in shoulder simulator model correlates with similar task performance in cadavers. J Bone Joint Surg Am 2011;93:e1271-5. |

| 21. | Coughlin RP, Pauyo T, Sutton JC 3rd, Coughlin LP, Bergeron SG. A Validated Orthopaedic Surgical Simulation Model for Training and Evaluation of Basic Arthroscopic Skills. J Bone Joint Surg Am 2015;97:1465-71. |

| 22. | Garfjeld Roberts P, Guyver P, Baldwin M, Akhtar K, Alvand A, Price AJ, et al. Validation of the updated ArthroS simulator: face and construct validity of a passive haptic virtual reality simulator with novel performance metrics. Knee Surgery, Sports Traumatology, Arthroscopy 2016:1-10. |

| 23. | Howells NR, Gill HS, Carr AJ, Price AJ, Rees JL. Transferring simulated arthroscopic skills to the operating theatre: a randomised blinded study. J Bone Joint Surg Br 2008;90:494-9. |

| 24. | Boutefnouchet T, Laios T. Transfer of arthroscopic skills from computer simulation training to the operating theatre: a review of evidence from two randomised controlled studies. SICOT J 2016;2:4. |

| 25. | Tay C, Khajuria A, Gupte C. Simulation training: A systematic review of simulation in arthroscopy and proposal of a new competency-based training framework. International Journal of Surgery 2014;12:626-33. |

| 26. | Andersen C, Winding TN, Vesterby MS. Development of simulated arthroscopic skills. Acta Orthop 2011;82:90-5. |

| 27. | Arroyo BC, Torres GA, Romo RR, Ruiz SJ. Reference values for knee diagnostic arthroscopy in a virtual reality simulator. An Med Asoc Med Hosp ABC 2017;62:22-9. |

| 28. | Marcheix PS, Vergnenegre G, Dalmay F, Mabit C, Charissoux JL. Learning the skills needed to perform shoulder arthroscopy by simulation. Orthop Traumatol Surg Res 2017;103:483-8. |

| 29. | Raison N, Ahmed K, Fossati N, Buffi N, Mottrie A, Dasgupta P, et al. Competency based training in robotic surgery: Benchmark scores for virtual reality robotic simulation. BJU International 2017;119:804-11. |
